A Music Quiz Platform, Built in a Week

We gave ourselves one week to test a simple but uncomfortable idea: what happens if you drop manual coding entirely and rely solely on agentic AI to ship a real product?

The goal wasn’t polish, scale, or product–market fit. The constraint we cared about was time. Our bet was that removing manual implementation work would force a different delivery dynamic — faster decisions, fewer abstractions, and tighter feedback loops.

To make the experiment concrete, we needed something real to build and launch. We chose Music Quizzer, a music quiz platform, with a deliberately pragmatic product goal:

- Start a quiz in 30 seconds — choose a pre-built quiz or create your own and let participants join instantly.

That product goal wasn’t the point; it was the proving ground. The real question was whether agentic coding could carry an end-to-end delivery effort under real constraints: not in a "vibe-coding" frenzy, but through a production-grade delivery loop with controlled changes and deterministic promotion between environments.

What follows is a write-up of that experiment: what we built, how we worked, and what changed when AI was responsible for coding.

What we shipped in a single week

Not a half-baked Wizard of Oz prototype, but a functioning, end-to-end platform tested with real users. The easiest way to understand the scope is to break the platform into four distinct experiences that all had to work together seamlessly.

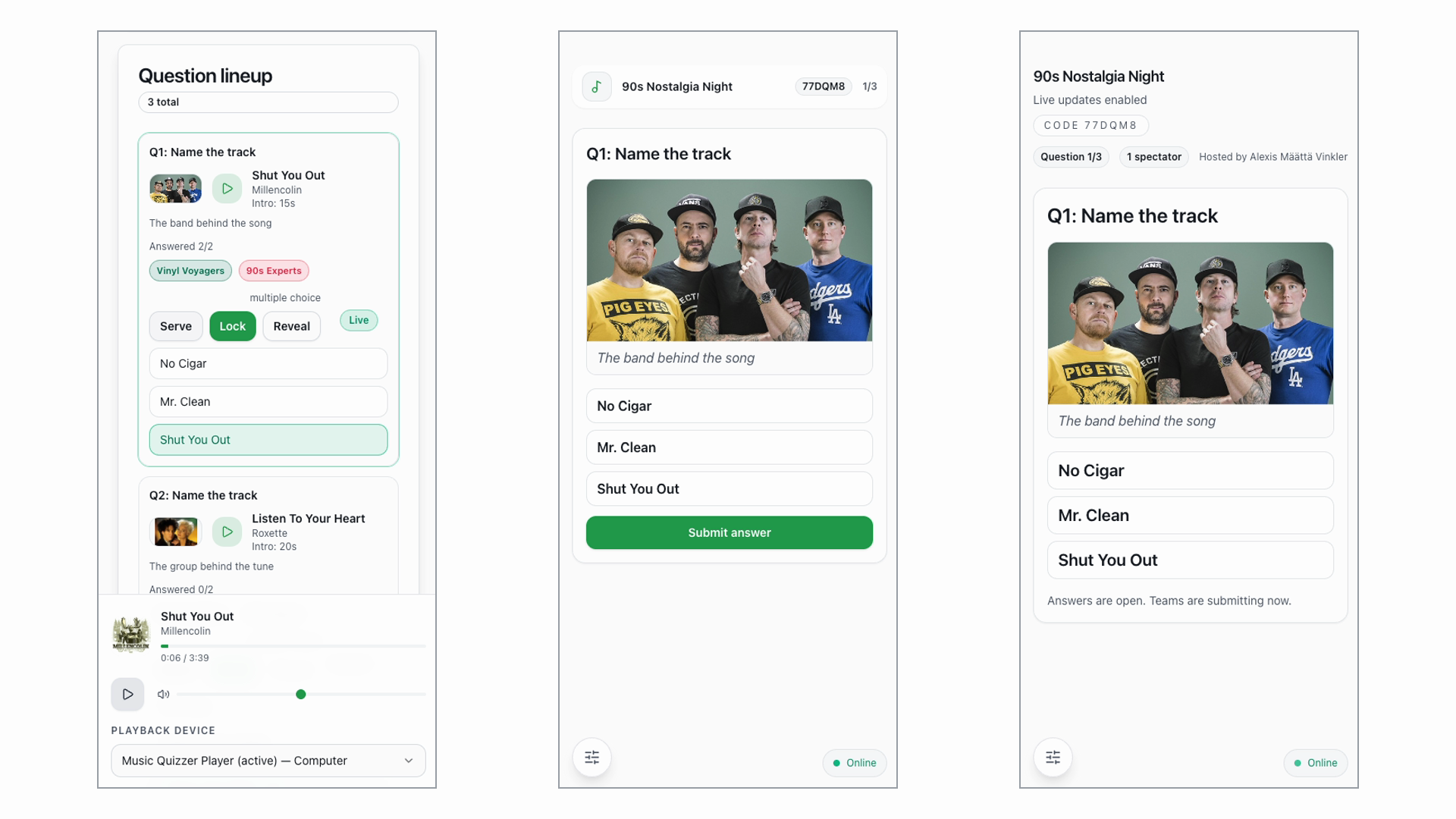

We built a Quiz Builder XP, a Live Host XP, a Live Contestant XP, and a Live Spectator XP. Each one is a big piece on its own, and the latter three had to be reliable at the same time during a live session.

Here is a breakdown of the most prominent features within each experience.

Quiz Builder (host setup)

- Host authentication via Spotify single sign-on (OAuth).

- Playlist synchronization and track preview from the host’s Spotify account.

- Remote playback control with intro playback and fade-out handling.

- Quiz creation using Spotify tracks, prompts, and structured answers.
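Fade-out handling is a good example of why playback control goes beyond "play". A minimal sketch, assuming a linear ramp: the hypothetical `planFadeOut` helper only computes the volume steps and makes no network calls. Applying each step would go through the Spotify Web API "Set Playback Volume" endpoint (`PUT /v1/me/player/volume` with a `volume_percent` query parameter).

```typescript
// Hypothetical helper: plans a linear fade-out as a list of volume steps.
// It makes no network calls; each step could later be sent to Spotify's
// "Set Playback Volume" endpoint (PUT /v1/me/player/volume?volume_percent=N).

interface VolumeStep {
  atMs: number; // offset from the start of the fade, in milliseconds
  volumePercent: number; // integer 0..100
}

function planFadeOut(startVolume: number, durationMs: number, stepMs: number): VolumeStep[] {
  if (durationMs <= 0 || stepMs <= 0) {
    throw new Error("durationMs and stepMs must be positive");
  }
  // Clamp the starting volume into the 0..100 range the endpoint accepts.
  const start = Math.min(100, Math.max(0, Math.round(startVolume)));
  const count = Math.ceil(durationMs / stepMs);
  const steps: VolumeStep[] = [];
  for (let i = 1; i <= count; i++) {
    // The last step is pinned to durationMs so the fade always ends at 0.
    const atMs = Math.min(i * stepMs, durationMs);
    const remaining = 1 - atMs / durationMs; // linear: 1 at the start, 0 at the end
    steps.push({ atMs, volumePercent: Math.round(start * remaining) });
  }
  return steps;
}
```

A scheduler can then fire one request per step at its `atMs` offset and pause playback once the volume reaches 0. Coarser steps (a few hundred milliseconds) keep the number of API calls small at the cost of a less smooth fade.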

Live Host (DJ-style control)

- Live quiz orchestration using join codes, phase control, and answer reveals.

- Room pacing control for serving questions, locking answers, and triggering reveals.

- Scoreboard visibility management aligned with room energy.

- Answer reveal timing controlled explicitly by the host.
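Phase control is essentially a small state machine owned by the host. The sketch below is an assumption about how it could be modeled, not the platform's actual code: the phase names, the optional scoreboard step, and the `advance` helper are all hypothetical. The point is that illegal jumps are rejected, so answers only arrive while a question is open and a reveal always follows a lock.

```typescript
// Hypothetical phase model for a live room; names are illustrative.
type Phase = "lobby" | "question" | "locked" | "reveal" | "scoreboard" | "finished";

// Which phases the host may move to from each phase.
const allowedNext: Record<Phase, Phase[]> = {
  lobby: ["question"],
  question: ["locked"], // answers are accepted only while in "question"
  locked: ["reveal"],
  reveal: ["scoreboard", "question", "finished"], // showing the scoreboard is optional
  scoreboard: ["question", "finished"],
  finished: [],
};

interface RoomState {
  phase: Phase;
  questionIndex: number; // -1 before the first question is served
  totalQuestions: number;
}

function advance(state: RoomState, next: Phase): RoomState {
  if (!allowedNext[state.phase].includes(next)) {
    throw new Error(`Illegal transition ${state.phase} -> ${next}`);
  }
  if (next === "question") {
    // Serving a question always moves to the next one in the quiz.
    const questionIndex = state.questionIndex + 1;
    if (questionIndex >= state.totalQuestions) {
      throw new Error("No questions left; move to 'finished' instead");
    }
    return { ...state, phase: next, questionIndex };
  }
  return { ...state, phase: next };
}

function acceptsAnswers(state: RoomState): boolean {
  return state.phase === "question";
}
```

Because the host only ever requests the next phase, pacing stays entirely in their hands, while the transition table guarantees the room cannot skip the lock.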

Live Contestant (team play)

- Instant game entry via short join codes or QR links.

- Team creation and member management during live play.

- Persistent team tokens enabling seamless rejoin after device dropouts.

- Digital answer submission with immediate confirmation.

- Live scoreboard updates and answer reveals triggered by the host.
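Short join codes and persistent team tokens are what make instant entry and seamless rejoin possible. A minimal in-memory sketch, assuming a code alphabet without look-alike characters and a random UUID as the team token. `generateJoinCode`, `Room`, and `rejoin` are hypothetical names; a real deployment would store tokens in the database (and check that a new code is not already used by an active room) rather than in a `Map`.

```typescript
import { randomInt, randomUUID } from "node:crypto";

// Hypothetical join-code alphabet: no 0/O, 1/I, or L, so codes are easy to read aloud.
const CODE_ALPHABET = "ABCDEFGHJKMNPQRSTUVWXYZ23456789";

function generateJoinCode(length = 5): string {
  let code = "";
  for (let i = 0; i < length; i++) {
    // randomInt(max) returns a uniform integer in [0, max) without modulo bias.
    code += CODE_ALPHABET[randomInt(CODE_ALPHABET.length)];
  }
  return code;
}

interface Team {
  id: string;
  name: string;
  members: string[];
  score: number;
}

// In-memory stand-in for what would be database rows in a real deployment.
class Room {
  readonly joinCode = generateJoinCode();
  private teamsByToken = new Map<string, Team>();

  // Creates a team and returns an opaque token for the device to keep.
  createTeam(name: string): { token: string; team: Team } {
    const team: Team = { id: randomUUID(), name, members: [], score: 0 };
    const token = randomUUID();
    this.teamsByToken.set(token, team);
    return { token, team };
  }

  // After a dropout, the device presents its stored token and gets the same team back.
  rejoin(token: string): Team | undefined {
    return this.teamsByToken.get(token);
  }
}
```

The contestant's device keeps the token (for example in `localStorage`) and presents it after a dropout, so the team comes back with its members and score intact.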

Live Spectator (big screen + companion)

- Dedicated spectator view tailored for big screens, with companion-device support for shared viewing.

- Side-by-side team answer reveals during result phases.
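For side-by-side reveals, the spectator view needs one row per team, including teams that did not answer. The sketch assumes free-text answers, keeps each team's latest submission, and marks correctness with a simple normalized comparison. That matching rule and the `buildRevealRows` helper are hypothetical; the real platform may judge answers differently.

```typescript
// Hypothetical shapes for one question's reveal on the spectator screen.
interface Submission {
  teamName: string;
  answer: string;
  submittedAtMs: number;
}

interface RevealRow {
  teamName: string;
  answer: string; // empty string when the team did not answer
  correct: boolean;
}

// Assumed matching rule: ignore letter case and extra whitespace.
function normalize(s: string): string {
  return s.toLowerCase().replace(/\s+/g, " ").trim();
}

function buildRevealRows(subs: Submission[], correctAnswer: string, allTeams: string[]): RevealRow[] {
  // Keep only each team's latest submission.
  const latest = new Map<string, Submission>();
  for (const s of subs) {
    const prev = latest.get(s.teamName);
    if (!prev || s.submittedAtMs > prev.submittedAtMs) latest.set(s.teamName, s);
  }
  const target = normalize(correctAnswer);
  // One row per team in a stable alphabetical order, so columns don't jump between reveals.
  return [...allTeams]
    .sort((a, b) => a.localeCompare(b))
    .map((teamName) => {
      const s = latest.get(teamName);
      const answer = s ? s.answer : "";
      return { teamName, answer, correct: s !== undefined && normalize(answer) === target };
    });
}
```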

Live flow & pacing

- Continuous play without reloads driven entirely by host pacing.
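Continuous play without reloads comes down to every client subscribing to one room channel and re-rendering when the host publishes a change. The class below is a hypothetical in-memory stand-in for a managed realtime channel; in supabase-js v2 the comparable feature is Supabase Realtime Broadcast (`channel.on("broadcast", ...)` to listen, `channel.send(...)` to publish). Broadcast messages are ephemeral, so a real client would also load the current room state when it (re)subscribes; the sketch folds that catch-up into `last`.

```typescript
// Hypothetical in-memory stand-in for a realtime broadcast channel.
type Listener<T> = (payload: T) => void;

class BroadcastChannelSketch<T> {
  private listeners = new Set<Listener<T>>();
  private last: T | undefined = undefined;

  subscribe(fn: Listener<T>): () => void {
    this.listeners.add(fn);
    // Late joiners and reconnecting devices get the latest state right away,
    // so nobody has to reload the page to catch up.
    if (this.last !== undefined) fn(this.last);
    // Returns an unsubscribe function.
    return () => {
      this.listeners.delete(fn);
    };
  }

  // Called by the host: remember the state and push it to every subscriber.
  send(payload: T): void {
    this.last = payload;
    for (const fn of this.listeners) fn(payload);
  }
}
```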

This is the kind of scope that usually explodes a timeline. But we did it in a week — as a two-man army empowered by AI!

The three live perspectives (host, contestant, and spectator) shown side by side.

Why this normally takes 2-3 months

If you have built any software, particularly B2C, with third-party integrations and real-time client/server coordination, you know why.

The time sinks are real and predictable — they show up as recurring sources of complexity and failure risk:

- Integrating third-party OAuth with strict redirect rules, token lifecycles, and hard-to-test edge cases.

- Implementing reliable playback control, going beyond “play” to handle device selection, timing, and transitions.

- Coordinating multiple live user roles, all requiring continuous real-time synchronization.

- Building real-time infrastructure for presence, shared state, and scoreboard broadcasting.

- Managing session and identity lifecycles across join codes, team tokens, and rejoin flows.

- Guaranteeing live-session reliability, where failures cannot be mitigated with manual fallbacks.
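Two of these time sinks are easy to illustrate. Spotify's authorization code flow supports PKCE, where the client keeps a random code verifier and sends only its SHA-256 hash (RFC 7636), and Spotify access tokens expire after an hour, so something has to refresh them before a live session breaks. A minimal sketch using Node's `crypto` module; the helper names and the 60-second safety margin are assumptions, and Supabase Auth can run the OAuth flow itself.

```typescript
import { createHash, randomBytes } from "node:crypto";

// PKCE (RFC 7636): the client keeps a random verifier secret and sends only its hash
// (the code challenge) in the authorization request.
function createCodeVerifier(): string {
  // 32 random bytes encode to 43 base64url characters, the minimum length RFC 7636 allows.
  return randomBytes(32).toString("base64url");
}

// S256 method: BASE64URL(SHA256(verifier)), without padding.
function codeChallengeS256(verifier: string): string {
  return createHash("sha256").update(verifier).digest("base64url");
}

// Token lifecycle: refresh shortly before expiry instead of waiting for a 401 mid-quiz.
interface StoredToken {
  accessToken: string;
  refreshToken: string;
  expiresAtMs: number; // receivedAtMs + expires_in * 1000
}

function needsRefresh(token: StoredToken, nowMs: number, marginMs = 60_000): boolean {
  return nowMs >= token.expiresAtMs - marginMs;
}
```

The verifier never leaves the client until the token exchange, which is what lets the authorization server confirm that the same client that started the login is finishing it.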

These are the exact forces that usually stretch a “cool idea” into a multi-month build.

How we compressed the timeline to one week

The short answer is AI-assisted development done right. The longer answer is a shift in mindset and in how we work.

- We prohibited ourselves from touching the codebase. All development had to be done by AI agents, be it frontend or backend.

- We used AI as a real teammate. Not a novelty. A daily collaborator for scaffolding, wiring, and cross-checking. This removed days of boilerplate and allowed us to focus on product clarity and UX.

- We built vertical slices, not components. Each slice went from UI to API to data. That is how you get a working system, not a pile of half-built parts.

- We leaned on modern managed services. Supabase for auth and real-time, edge functions for a clean API surface, Spotify APIs for playback. This is not new. What is new is how quickly you can ship when you combine these with AI-driven implementation.

Proof it works: a live test

We ran a full live quiz on January 17, 2026, with 26 participants split into seven teams. The quiz ran for two sessions totaling roughly two and a half hours. The system remained stable and did not require a fallback to analog tools.

We still uncovered UX issues (for example, some screens required a manual refresh early on), but the core technical feasibility was validated.

The headline: the product held up in the real world, in a live social setting, under real-time pressure!

The enablers behind the speed

This wasn’t “faster coding.” It was a different development loop. We combined AI-native tooling with an automated delivery pipeline so the distance between an idea and a live test collapsed.

Here’s what powered the week:

- VS Code + ChatGPT Codex for in-context co-building and rapid iteration.

- Chrome MCP integration so AI agents could inspect the running app directly, not through screenshots or manual notes.

- Supabase MCP integration for direct access to auth, data, and real-time systems without manual context gathering.

- Supabase edge functions + Spotify APIs to ship production-grade auth and playback without building infrastructure from scratch.

- An automated pipeline across local → staging → production. A frontend, backend, or even a database change could deterministically reach prod in minutes.
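Deterministic promotion means the same build moves forward one environment at a time, and database changes are applied in a fixed order. The rules below are a hypothetical sketch rather than the pipeline we ran: `promote` only lets a commit whose checks passed advance to the next environment, and `pendingMigrations` relies on the Supabase CLI convention of timestamp-prefixed files in `supabase/migrations`, roughly what `supabase db push` applies to a linked project.

```typescript
// Hypothetical promotion rules for local -> staging -> production.
const ENVIRONMENTS = ["local", "staging", "production"] as const;
type Env = (typeof ENVIRONMENTS)[number];

interface Deployment {
  env: Env;
  commitSha: string;
  checksPassed: boolean;
}

// Only a commit whose checks passed may move, and only one environment forward.
function promote(current: Deployment, target: Env): Deployment {
  const from = ENVIRONMENTS.indexOf(current.env);
  const to = ENVIRONMENTS.indexOf(target);
  if (!current.checksPassed) {
    throw new Error(`Checks have not passed for ${current.commitSha} in ${current.env}`);
  }
  if (to !== from + 1) {
    throw new Error(`Cannot promote ${current.env} -> ${target}`);
  }
  // Same commit in the next environment; its checks must pass again there before the next hop.
  return { env: target, commitSha: current.commitSha, checksPassed: false };
}

// Supabase CLI migrations are files named <timestamp>_<name>.sql in supabase/migrations,
// so a plain lexicographic sort yields the order they must be applied in.
function pendingMigrations(all: string[], applied: Set<string>): string[] {
  return all.filter((file) => !applied.has(file)).sort();
}
```

Promoting a commit SHA rather than rebuilding per environment is what makes the result deterministic: production runs exactly what staging verified.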

The contrast with traditional development is the point: far less human intervention in coding, environment management, and validation, because the AI-native workflow and the automated pipeline take over those steps.

The real takeaway for software teams

This is not about music quizzes. It is about the new velocity ceiling for teams that embrace AI-driven development.

A week used to be enough time to:

- align on architecture

- write a few endpoints

- maybe ship a landing page

Now a week can be enough time to:

- build a complete, integrated product

- prove it in a live environment

- decide what is worth investing in next

The constraint shifts from “can we afford to build it?” to “can we test this technically, end to end, and learn from it?”

If you are in software, this changes your job

You can fight it. Or you can use it.

In-context agentic AI development does not remove the need for craft, judgment, or design; combined with them, it takes you well beyond vibe-coding.

By calling the shots on architecture, guardrails, and release flow, we as developers stay in control and keep our delivery loops production-grade, while still leveraging the pace that AI agents provide.

That is how you win in 2026!

If you want a detailed walkthrough of the AI stack, or would like to host a quiz of your own, reach out! 😉

Or, if you are fine with just the bigger lesson: start small, ship fast, and let AI handle the heavy lifting!